A Smart Speaker With ChatGPT, or, The Alexa We Were Promised

Anyone who has ever tried communicating with an Amazon Echo device will be able to attest that these “smart” speakers are, increasingly, anything but. I sheepishly confess that I actually have four Amazon Echo devices, the first two of which I got as a free promo for buying a cell phone in 2019. By and large, I use them mostly for music, radio, and the weather. You know, when the device understands me. Why can’t I use it for anything else? What happened with this technology? And why does it seem to have been getting worse, according to a number of sources? While no one seems to know what’s up with the cantankerous smart home assistant, one thing’s for damn sure, and that’s that whoever figures out how to integrate ChatGPT into a smart speaker might well deliver the Alexa that we were promised.

How Would That Work?

The particularly neat thing about ChatGPT is that it is quite portable through the use of a thing called an application programming interface, or API. The API is the component of the software that allows it to “talk” to other software. Remember when every website and phone application started to have that whole “create a new account slash log in with Google or Facebook” option? Or when Strava gives you the option to “post your ride on Facebook”? Those are good examples of the API at work.
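To make the “talking to other software” bit concrete, here is a minimal sketch of what a program would hand to a ChatGPT-style API. It assumes OpenAI's chat completions endpoint and its `model`/`messages` request shape; the function name `build_chat_request`, the default model name, and the placeholder key are mine, for illustration only. Nothing is actually sent over the network here, it just builds the request.

```python
import json

# Assumption: OpenAI's chat completions endpoint and JSON request shape.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(question, api_key, model="gpt-3.5-turbo"):
    """Return (url, headers, body) for one question. Nothing is sent."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = json.dumps({
        "model": model,
        # One user message; a real assistant would also keep prior turns here.
        "messages": [{"role": "user", "content": question}],
    })
    return API_URL, headers, body


# Hypothetical usage: the key below is a placeholder, not a real credential.
url, headers, body = build_chat_request("Will it rain today?", "YOUR_KEY_HERE")
print(url)
print(body)
```

A smart speaker would do roughly this behind the scenes, then POST the body to the URL and read the reply back out loud.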

Devices like Echo or an iPhone will usually offer product developers sets of tools to help them integrate their own software, like the Gosund app that I have that lets me control a few lights on “smart” outlets (after we figure out how to get smart speakers to work, we can talk to the Chinese about how to name pronounceable products with legible, real-life-sounding-kinda-names for the North American market). So, once we are able to get the API business figured out, we still have to train the model.

The “P” stands for “Pre-Trained.” Do we have to train it again?

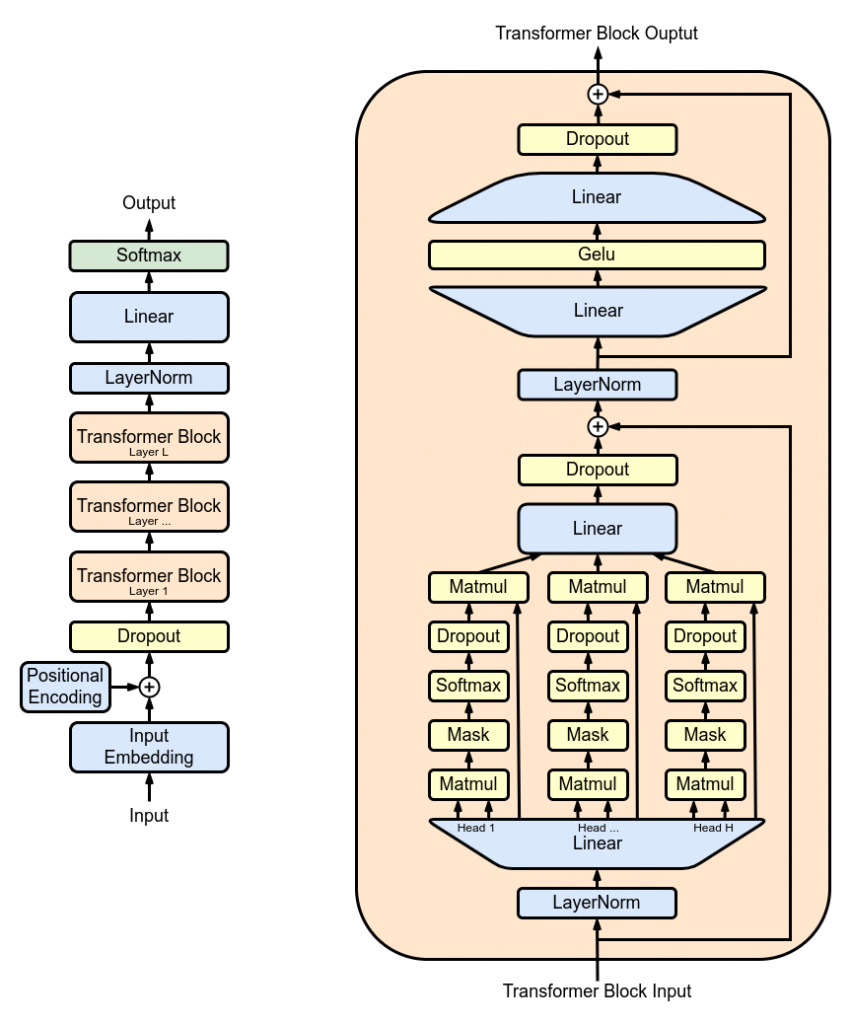

The idea of GPT is that the “P” stands for “pre-trained.” So, there are already billions of parameters built into GPT-3, for example (175 billion, to be specific), and each new iteration is more complex than the last. But in the case of a smart speaker, we’re speaking to it out loud. It’s different from typing. Most English speakers will be able to type roughly the same query into ChatGPT. But those same English speakers might have completely different voices, which the software+hardware combo might not understand equally well. This has been extensively studied in contexts where technology built around a “normal” baseline ends up being skewed toward, say, women’s voices, or white skin as opposed to darker skin (Google noted this when releasing its latest Pixel models).

The difference between ChatGPT understanding you and Alexa understanding you is that Alexa not only has to process responses to inquiries, it first has to translate what it hears into text before it can even input the query. Challenges to training the Alexa model (about which Amazon has been quite opaque except to say that it cost a ton of money and time) include the fact that everyone’s voice has different parameters, everyone has a slightly different vernacular and a slightly different accent, and, perhaps more obnoxiously for the system designers, the fact that a lot of queries are probably coming from noisy rooms or rooms with weird echoes.
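The two-step flow described above (hear it, turn it into text, then answer it) can be sketched in a few lines. Everything here is a stand-in: `transcribe` and `answer` are hypothetical placeholders for a real speech recognition model and a real language model call, and the “audio” is just bytes that happen to contain text, so the example runs without a microphone.

```python
def transcribe(audio: bytes) -> str:
    # Hypothetical stand-in for speech recognition. A real system would run
    # an acoustic model here; this just decodes and normalizes the bytes.
    return audio.decode("utf-8").strip().lower()


def answer(query: str) -> str:
    # Hypothetical stand-in for the language model call.
    return f"You asked: {query}"


def handle_utterance(audio: bytes) -> str:
    # Step 1: audio to text. This is the step a typed ChatGPT query skips.
    text = transcribe(audio)
    if not text:
        # Noisy room, weird echo, or silence: nothing usable was heard.
        return "Sorry, I didn't catch that."
    # Step 2: text to response.
    return answer(text)


print(handle_utterance(b"  Play Some Jazz "))
```

The point of the split is that a mistake in the first step poisons the second: the language model can only be as good as the transcript it is handed.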

Audio files are much larger than text files, of course, which poses a separate set of challenges, because it means that the data throughput needed to train a texty/mathy model like GPT would have to increase by orders of magnitude to achieve the same results with audio data. However, we’ve made a lot of progress in speech-to-text technology in recent decades, concomitant with the rise of much more efficient LLMs and ML models.
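For a rough sense of the size gap, here is a back-of-the-envelope calculation. The numbers are assumptions chosen for illustration: uncompressed mono audio at 16 kHz with 16-bit samples (a common format for speech work), and a two-second spoken query.

```python
SAMPLE_RATE_HZ = 16_000   # assumption: 16 kHz mono audio
BYTES_PER_SAMPLE = 2      # assumption: 16-bit samples


def audio_bytes(seconds: float) -> int:
    """Size of uncompressed audio of the given duration."""
    return int(seconds * SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)


def text_bytes(text: str) -> int:
    """Size of the same query typed out as UTF-8."""
    return len(text.encode("utf-8"))


query = "what is the weather today"
spoken_seconds = 2.0      # assumption: how long it takes to say the query

print(audio_bytes(spoken_seconds))                      # 64000
print(text_bytes(query))                                # 25
print(audio_bytes(spoken_seconds) / text_bytes(query))  # 2560.0
```

Under those assumptions the spoken version is a few thousand times bigger than the typed one. Compression narrows the gap, but it stays large.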

A Bit Longer Than The Next Two-Week Sprint

So, it’s inevitable that we’ll see this in the near future, and whoever develops that product might actually figure out how to deliver the true “smart home” stuff we were promised ever so many moons ago. I assume Amazon and Google are already on the case, and Samsung has already made headlines for ridiculous stuff in the ChatGPT realm, so it’s probably going to be a competitive market, especially given the open-source-but-maybe-kinda-not nature of ChatGPT. A life-changing innovation overnight? Well, no. It’ll take years for these devices to actually work seamlessly with one another (another thing we were promised, but companies are more obsessed with scarcity mindsets, planned/forced obsolescence, and protecting their own platform even at the expense of expanding market opportunities). But the fact that ChatGPT has even trounced Google in a lot of capabilities, plus its portability via API for a number of other applications, is an encouraging start.