Midjourney AI: Escaping Down The Rabbit Hole

In recent weeks, I’ve done a cannonball off the high-dive into the deep end of what I might call “consumer-grade” artificial intelligence. It’s equal parts fascinating and escapist, while still raising profound questions about what kind of rabbit hole we are about to dive down as a society. You’ve probably heard about ChatGPT, DALL-E, and Midjourney, the generative software products that are stirring the hearts and minds of men, as it were (and stirring plenty of controversy) across the globe. With ChatGPT, entering a simple prompt can produce a full-length, quasi-academic essay; with DALL-E or Midjourney, it can produce a series of beautiful images, rendered almost instantaneously. Is it the greatest thing since the invention of the meme? Speaking of memes, Jean Baudrillard is meanwhile turning in his grave. Or is it actually Baudrillard, or a simulacrum of Baudrillard?!

AI, The New Tech Bubble

Artificial intelligence has blown up in recent years, and estimates figure that the sector is going to grow by leaps and bounds as a matter of market capitalization alone, to say nothing of the evolution of the technology itself. While I’ve followed the development of artificial intelligence for years, the phenomenon of AI that can generate coherent responses is relatively new. I think back to one of my favorite articles of all time, in which a researcher tried to get an AI to invent paint colors, which were all hilarious in a sort of, “I see what you were going for, but what the hell” kind of way. This was in 2017.

When the technology was substantially less polished, I remember being genuinely disturbed by one AI-generated image, which appeared in a tweet of someone saying something to the effect of, “hey, look at all of this great stuff they were just throwing out at work!” It appeared at first glance (especially if you were just mindlessly scrolling through your feed) to be a tray of food, perhaps pastries or sandwiches. But if you looked closer, you realized that it wasn’t food at all. Nor was it really anything else. It was just a bunch of objects that a computer thought looked like, well, real things. I remember the thing, whatever it was, had a bunch of weird lighting artifacts in it. Sparkling cinnamon buns. I found this positively unsettling, although I’m not sure that the “uncanny valley” is meant to refer to inanimate objects.

In 2022, I started seeing truly wacky stuff produced by DALL-E, whose creators at OpenAI also built ChatGPT. DALL-E quickly developed a reputation for producing fun and quirky images, like one set I remember of Columbo reimagined for various game consoles. To be clear, there is absolutely no purpose to this specifically, except that the novelty value can teach us a great deal about how the technology works. None of these images really tells us much, and they’re notably pretty primitive compared to how far the technology has developed in the year since I saved them. Beyond that, though? It’s fun!

I remember commenting at the time, “this is what the internet was made for!”

From “Accurate Enough” Imagery to Beautiful Art

Midjourney, in comparison to DALL-E (which I haven’t personally used), has a reputation for creating gorgeous and often extravagant imagery based on even simple prompts. This is a product of a highly sophisticated rendering engine, even if the platform kinda sucks at language in comparison to ChatGPT, which has pretty stellar natural language processing infrastructure. As a user, the distinction is only as relevant as your ability to use it.

These types of artificial intelligence analyze large sets of images. Human input guides the process of machine learning, so the AI will “guess” and the human will look through a series of things and say, “yes, that’s correct!” or, “no, that’s not a chocolate donut, it’s a turtle.” Just as with the paint color AI coming up with names like “snowbonk” or “stanky bean,” which at least resemble real words, sometimes the AI’s determinations are “close, but completely wrong.”

Midjourney delivers impressive results, and with minimal effort, no less. I’ll save my critiques of it for a separate article, because it has some extremely annoying limitations. My current challenge is trying to understand what to do with it, and the technology has sparked a lot of ideas in my writing-brain. I could write a comic book, made by robots! I could illustrate my blog articles! Et cetera. (I’m still sticking with real stock photos for the time being, but I’m going to be using some Midjourney illustrations, just for fun!) Still, this raises the question of whether this would, in fact, be “my” art.

Understanding The Authorship Controversy

Clearly it wouldn’t be mine. Midjourney requires Creative Commons attribution for free trial products, but the ones you generate after that are fair game for, well, whatever. This is problematized by one major factor, which is that Midjourney and its human puppets have trained the AI model on real images. In other words, the images that it creates are at least “inspired” by real life images. And whose images are those?

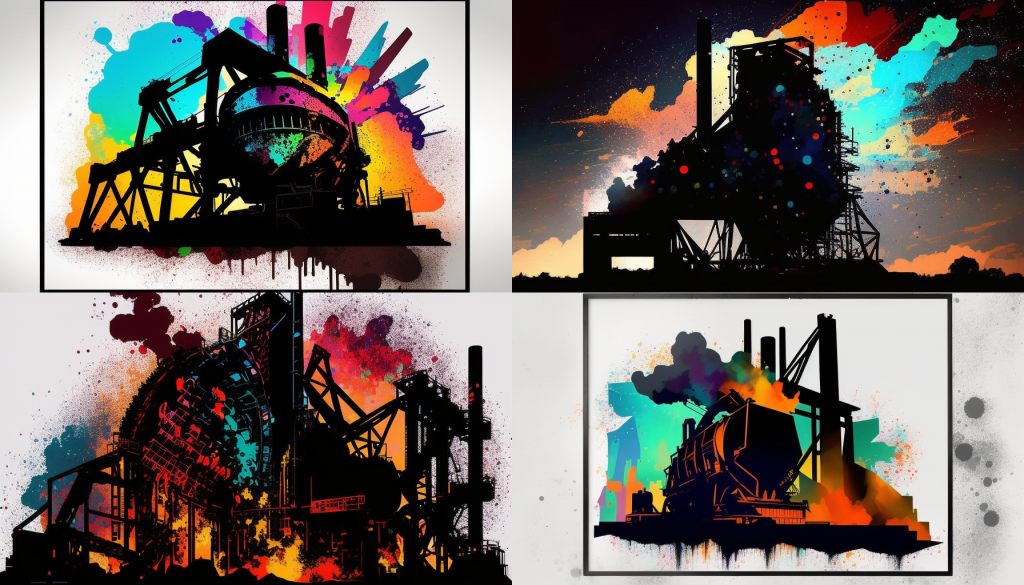

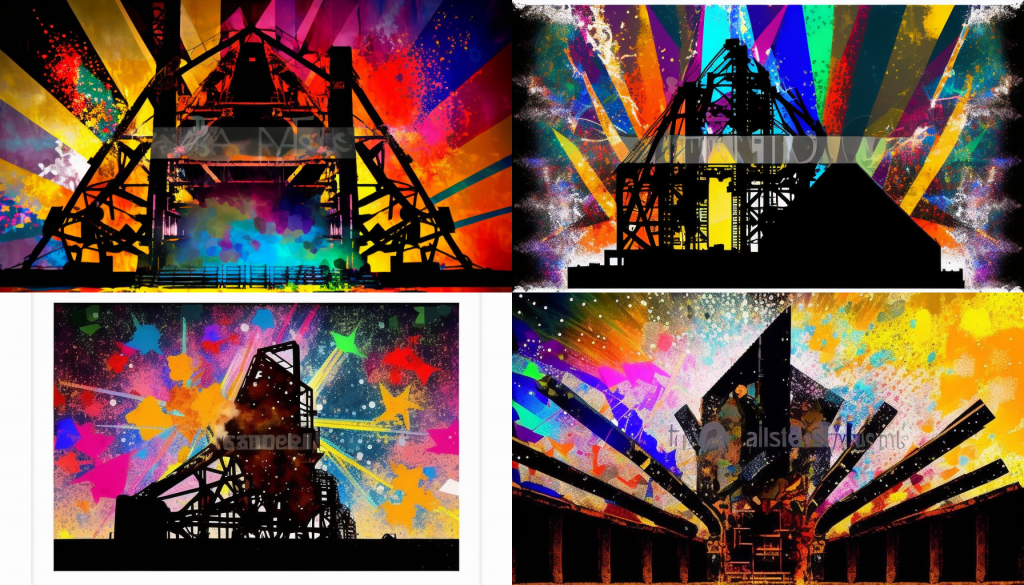

The controversy around the question of authorship can be specifically highlighted in this next set of images. Midjourney clearly “studied” some stock photos of steel mills and then just splotched some color on top of them. This is not a defect of the software per se, but rather, it represents a deficiency that future versions will have to figure out. The technical processes that would be required to edit out what show up below as watermarks are relatively simple, and involve determining what areas of the image have the watermark, and smoothing out the colors based on a determined target color or average. In the bottom images, it’d be very simple. The top images clearly have remnants of a watermark, but it’s perhaps less clear what should be done about it.
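For the curious, here is a minimal sketch of the masking-and-smoothing idea described above. It assumes the watermark has already been located (the `mask` input), works on a toy grayscale image stored as nested lists, and uses a hypothetical helper named `smooth_watermark`. It is an illustration of the general approach, not how Midjourney or any stock photo site actually handles watermarks.

```python
def smooth_watermark(image, mask):
    """Replace watermarked pixels with an average of clean pixels.

    image: list of rows, each a list of grayscale values (0-255).
    mask:  same shape as image; True where a watermark was detected.
           (Detecting the watermark is assumed to happen elsewhere.)
    """
    height, width = len(image), len(image[0])

    # Fallback target color: the average of every clean pixel in the image.
    clean = [image[y][x] for y in range(height) for x in range(width)
             if not mask[y][x]]
    fallback = round(sum(clean) / len(clean)) if clean else None

    result = [row[:] for row in image]  # copy so the input is not modified
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                continue
            # Collect clean pixels in the 3x3 window around (x, y).
            neighbors = [
                image[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
                if not mask[ny][nx]
            ]
            if neighbors:
                result[y][x] = round(sum(neighbors) / len(neighbors))
            elif fallback is not None:
                result[y][x] = fallback
    return result
```

On a flat background this erases the mark entirely, which is the "very simple" case. Where the watermark sits on top of real detail, averaging just produces a blur, which is why the messier images are harder to clean up.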

Does this mean that Midjourney “stole” images, say, from a stock image site, to train the model? Well, it’s not really clear! Those images are watermarked because they’re meant to be purchased for commercial or editorial use. That doesn’t mean that they can’t be referenced, though. Whether they can be referenced for commercial purposes is, as they say, a whole ‘nother question. The government, for its part, says these things can’t be copyrighted. For now, at least. Interestingly, in this landmark decision, the U.S. Copyright Office didn’t strike down the entire copyright registration for the comic book that was the subject of the case, just the images generated by Midjourney.

Academic Interpretations: What Is “Is”? Whose “Is” Is This?

I am remembering my senior year in college, when I struggled to stay awake in a truly brutal art history seminar defined disproportionately by often masturbatory reflections on the nature of being by dead French academics. The professor hated me and I did not do well. I found most of the syllabus thoroughly annoying at the time and I now spend a lot of time thinking about it a decade and some later.

A lot of Jean Baudrillard’s ideas, for example (groundbreaking at the time, and still vital to a lot of modern Western discourse), revolved around not only the question of authorship, but indeed the very nature of authorship itself. Baudrillard was interested in the implications of mass media and mass reproduction for the very ideas of “meaning” or even being itself.

What is an “original” work? Is it still an original work if it’s been substantially altered? This is something that has dogged art historians for decades. Let’s say a painting is substantially damaged in a fire or a flood. Does the restoration make it a “new” painting, or is it still the original? There’s not really a conclusive answer to this question, save the consensus that Rowan Atkinson’s “restoration” of Arrangement in Grey and Black No.1, a.k.a. Whistler’s Mother, in the 1997 film Bean, is not the same as the original.

Originality: In Pixels Or In Brick

I confronted a similar question when I was working on Matterport imaging for Ford Motor Company’s Michigan Central Station project. I had remarked to the architect that it was a shame they were going to completely strip out some lurid graffiti in the former restaurant of the station. The discussion at that time had focused on the question of whether the graffiti was a blemish on the original architecture, but also whether the graffiti didn’t represent an epoch of the building’s history in and of itself.

Anyone who has ever been to the Reichstag in Germany will appreciate this, as the 1990s restoration of the former seat of government, disused since 1945, left a great many bullet holes and a great deal of Russian graffiti scrawled into the stone by teenage soldiers. Some of the less G-rated graffiti was removed (not that the average German or non-Russian tourist visiting the building speaks a word of Russian anyway), but the fact that so much of it has remained intact is fascinating from both historiographic and aesthetic standpoints.

Fascinatingly, a stone’s throw from the Reichstag stood the former Palast der Republik, the seat of East German government, which was built on the site of a former Prussian palace built a trillion years ago. Largely to signify the ultimate conquering of the former East Germany by the capitalist West, technocrats had the Palast demolished between 2006 and 2008, and rebuilt the Stadtschloss in its place. Now, it’s a charming ode to the history of the city! Totally the same as if it had been the original structure from circa 1700, right?

I mention these because we have a lot of debates (not just in the architecture world, the historic preservation world, or the AI world, but in most corners of society these days) about the question of authenticity, as well as the question of ownership. In the city planning world, the claim to the city, or to identity as a resident thereof, is constantly contested. Community For Whomst? as Alex Baca once said. “Whose streets? Our streets,” protesters chanted in Ferguson, St. Louis, Chicago, Detroit. Cops later chanted the same thing. (The irony of cops chanting this about a city they don’t live in is a subject for another day!)

Honing The Model: Toward Product (If Not Toward Resolving These Questions)

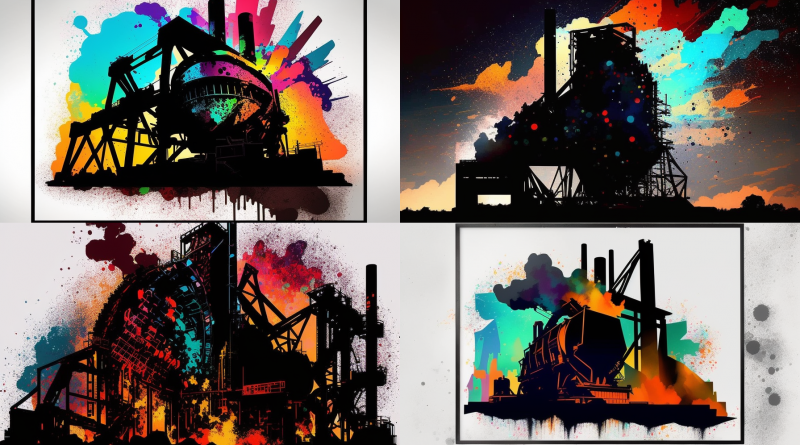

The steel mill project had a few iterations, and with each one, I learned more about the process of prompt engineering.

The next batch here notably lacks the watermarks. It’s not clear to me why Midjourney made two of these look like gallery works. Of course, these don’t look as much like steel mills per se. Industrial? Yes. But steel mills? I dunno. This is a good example of some of the limitations of the technology based on the prompt structure. (My co-conspirator informs me that it’s possible to actively exclude watermarks and text, although I am wondering how this might restrict the product based on the images the AI has studied!)
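As a rough illustration of what excluding watermarks and text might look like, here is a small sketch that assembles a prompt string using Midjourney’s documented `--no` parameter, which takes a comma-separated list of things to leave out. The helper name `build_prompt` and the example prompt are made up for illustration; they are not the prompts used for the images in this post.

```python
def build_prompt(description, exclude=()):
    """Build a Midjourney-style prompt with an optional exclusion list.

    description: the plain-language description of the image.
    exclude:     terms to steer away from, passed via the --no parameter.
    """
    description = description.strip()
    # Drop empty or whitespace-only exclusion terms.
    terms = [term.strip() for term in exclude if term.strip()]
    if not terms:
        return description
    return f"{description} --no {', '.join(terms)}"


# Hypothetical example prompt, with watermarks and text excluded.
prompt = build_prompt("steel mill at dusk, oil painting", ["watermark", "text"])
print(prompt)
```

The resulting string would be pasted after `/imagine` in Discord. Whether excluding those terms also narrows the range of source imagery the model draws on remains an open question.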

Anyway, is this the greatest thing ever? No, but it’s pretty darn neat! Am I still thinking about clever ideas for use cases and the Handbuilt City comic book written by AI? I mean, sure. Am I obsessed, though? Definitely. More on this subject in the coming days.