I asked Midjourney To Imagine The Ideal City. It Was… Weird.

Shortly after beginning my foray into the world of Midjourney AI, I came to a not terribly surprising revelation: A lot of the art being generated at the entry-level seems to be just minimally-imaginative entertainment for internet nerds and probably teenaged boys. In the “newbie” Discord channels in which all new Midjourneyers must start out their midjourneys, there’s a lot of stuff that just screams teenage angst– most likely that of the nation’s masses of unwashed, sweaty high school boys, imagining large-chested anime women or teched out cyberpunk girlfriends to match their RGB keyboard mat. But beyond that, there are a lot of genuinely clever prompts whose writers seem to be trying to use AI to help them imagine beyond, which is always something that can be appreciated by someone like me, who believes we can always do better as a society. This led to an interesting line of questioning: What would happen if I tried to let the AI “imagine” the idealized built environment? And what training does the AI have that leads it to normatively imagine the built environment?

From More Primitive Beginnings in More Primitive Graphics

I wanted to start with a fanciful but graphically simpler context instead of starting off trying to build a cogent, realistic portrayal of some kind of world. And, having grown up in the glory days of console gaming, I have a deep nostalgia for SNES, JRPGs, and what we now consider the more primitive art of the computer world (no less in an era when robots can generate– well, you’ll see in a minute!). I wanted to see if Midjourney was going to go on this– ahem- midjourney with me, by helping me imagine what a sustainable, multimodal urban planning millieu would look like– in 1990s SNES graphics. I figured that this lowered the bar for beauty, but I was very curious to see what it would come up with.

If you saw the below images and heard this in your head, well, you’re not alone!

Wearing my city planner hat– especially a city planner who has spent years doodling in graphing paper notebooks (if you didn’t know this about me already, I’m sure it’s probably the least surprising information you’ve received, possibly ever)- perhaps the first thing I’ve noticed about Midjourney’s imagination of the city is that the AI fundamentally does not understand the spatial parameters of streets. It will create intersections that don’t look like real intersections. Sidewalks will go through a building rather than in front of it. Sidewalks sometimes have parking lots between them and the street.

AI is notoriously bad at human hands (though, as the Beatles said, it’s getting better all the time), but this is perhaps more understandable because of how complex and nuanced the geometry of a human hand is. In contrast, streets exist on some sort of grid– or, at the least, some system of legibly connected nodes.

In fact, there seems to be an awful lot of paved area in general! We wonder whether that’s perhaps a product of a society whose built environment is so inextricably dependent upon the automobile. But then this raised another question: why, then, does it seem to get things like the buildings right? Buildings are much more complicated than streets and sidewalks, aren’t they?

Why Buildings, But Not Streets?

“Of course,” you might say. “It’s a damn robot. Why would it understand how streets work?”

If you look at the buildings created in Midjourney, it’s apparent that the AI has been extensively trained on images of buildings, whether houses, streetscapes, or large institutional buildings. I have especially enjoyed trying to see what kinds of things it can come up with that just blur all kinds of lines. Indo-Saracenic greenhouse Wal-Mart in a strip mall? Gothic beach house in Biarritz? Art nouveau mansion in Wicker Park? Why not any of these? Go nuts. This is the kind of thing that this platform thrives with– almost as though the AI itself “enjoys” creating bizarre, outlandish realities.

When it does, it usually gets most of the architectural details about right. Sometimes it blends styles in a way that a human might not do, but in a way that still kinda works. It’ll create a building with a special façade that fronts on one street but not the side street, or vice versa. (There is a fun example of this below). This is approximately correct! I suspected– based on a limited knowledge of how AI development works- that this involved a lot more in the way of the human operators training the AI by using images of buildings rather than buildings of intersections. This is one possibility. Another is that there simply aren’t as many photos or illustrations of streets and intersections for the AI to analyze.

I noticed similar challenges using DALL-E to try the same kind of thing.

After the AI model is built, it must be “trained”– a process in which human operators monitor inputs and outputs and make corrections to the inner workings of the model to ensure that the AI knows that something is a handgun and not a turtle, or vice versa. Because buildings are often the subjects of photos or illustrations, they provide good material to train the model.

But where there are fewer photos, and certainly far fewer illustrations, of something like well-designed bike lanes, this is where the AI fundamentally doesn’t “get” the idea of streets intersecting with one another, while being criss-crossed with crosswalks or bridges, while also being flanked by grade-separated bikeways or sidewalks– or what have you. In the above gallery, you can especially note this in the images on the top left. The middle one (lower-left corner image) also features what looks like a street that dead-ends into a bike lane.

Impressively, Midjourney has gotten the bollards right. The trees are perfect. There’s even a streetlamp. But the grids aren’t quite right! Again in the center collage (top left corner), we have an almost-plausible image of an autumnal street scene in the warm colors of sunset. But the stripes, which clearly are based on crosswalks, go across what looks like the sidewalk, and there are two street trees in the middle of whatever this pathway is. There’s then another streety substrate. It seems that this secondary, tertiary, quaternary, whatever surface, is meant to actually be for the bicycle (pictured).

And to top all of that off, the space behind the tree in the middle appears to be… again, a parking lot.

I asked ChatGPT how an AI model would do so well with architectural typologies but not with street typologies. The competing AI told me that:

[the] complexity of urban design and planning extends beyond simple visual patterns, and encompasses a wide range of factors, such as zoning regulations, land use policies, transportation systems, and community engagement. While an AI may be able to recognize certain visual patterns in urban design, it may struggle to fully understand the social, economic, and political factors that shape urban environments.

ChatGPT, to the Author, March 10, 2023

This is an interesting response. But, as with many more things from the artificial intelligence, it doesn’t really make sense. Because the land use policies and community engagement have nothing to do with the sort of layer cake that makes up the average street typology. You start with a base– asphalt or concrete- and you add curbs, and then you add grass. These are fairly straightforward spatial concepts that seem to elude the AI, which, in contrast, has no problem creating a realistic face of a human child with freckles, or an anthropomorphic rat wearing a Christmas sweater, because why not.

It may makes a bit more sense when you consider that buildings all have a lot of common elements, while they occupy lots, parcels of land, which are fixed in space in a city. Compare that with street grids. Streets are kinda fixed in space in a city, but they criss-cross. They involve complex layers. A crosswalk, for example, is a distinct demarcation that represents a pathway across an existing street. But it’s not really its own specific medium of transportation. It’s just paint in most cases. Sometimes a crosswalk will be raised. Other times it will have those little signs in the middle. None of these are terribly easy for a robot to understand without specific training.

An AI “looking” at objects in an urban streetscape may struggle to differentiate bollards from trees from humans, at the most basic level, perhaps similar to how self-driving autonomous vehicle technology in Australia had a really hard time figuring out what a kangaroo actually was (this was from my thesis research and it’s fascinating).

I had a similar catastrophe trying to get the AI to help me visualize the idea of a “dig once” policy. Given different permutations from a prompt for an isometric illustration, the AI would come up with tangled up tubes buried underneath a street, also in the company of a cave and a waterfall. In one of these images, the cave with the pool in it was filled with garbage– all in an elegant little isometric illustration! I’ve learned a lot more about prompt engineering since I tried these, but it’s also notoriously challenging to specify details that have to be layered in a specific way.

How could the AI know what something looked like that is easily defined in text, but physically buried, so much harder to define in terms of aesthetics or space? This is one of the challenges in the training model. Midjourney is fundamentally limited in that it is only able to “imagine” things for which it has a really solid and well-trained reference point. It apparently lacks the internal logic to devise an aesthetic representation of an accurate street grid.

That’s one idea. Another is that it’s studied a lot of pictures of suburbia, which features the parking lots that it seems to think are always isolated from the road using a sidewalk or bike lane. This is something I think about when I consider how many photos I see for my job of small businesses– and they are almost all located in strip malls. If Midjourney had studied just the companies that my job is usually dealing with, I can now see why the imagination might be so weird.

Has Midjourney Ever Been To Detroit?

For the next series, I wanted to try referencing a real place. I started with the prompt, “wide angle 22mm hyperrealistic, photorealistic, construction crew digging up street in modern-day Detroit, historic buildings, old hotel, office buildings, sunshine, –ar 16:9”. This gives it a wider angle aspect ratio than the normal block. I sometimes throw in additional terms to try and get it to use them. Sometimes it does, and sometimes it doesn’t. When it doesn’t use the terms I add, I suspect it’s because whatever image has been “imagined” by the AI in draft format is somehow categorically opposed to one or multiple of the terms I’ve thrown in. It’s a neat trick in Midjourney prompt engineering to be able to confound the inputs in a way that creates a clever, unexpected result. So here’s one of those basically-correct façades with a very strange but pleasingly hi-tech looking bulldozer in the middle of the street.

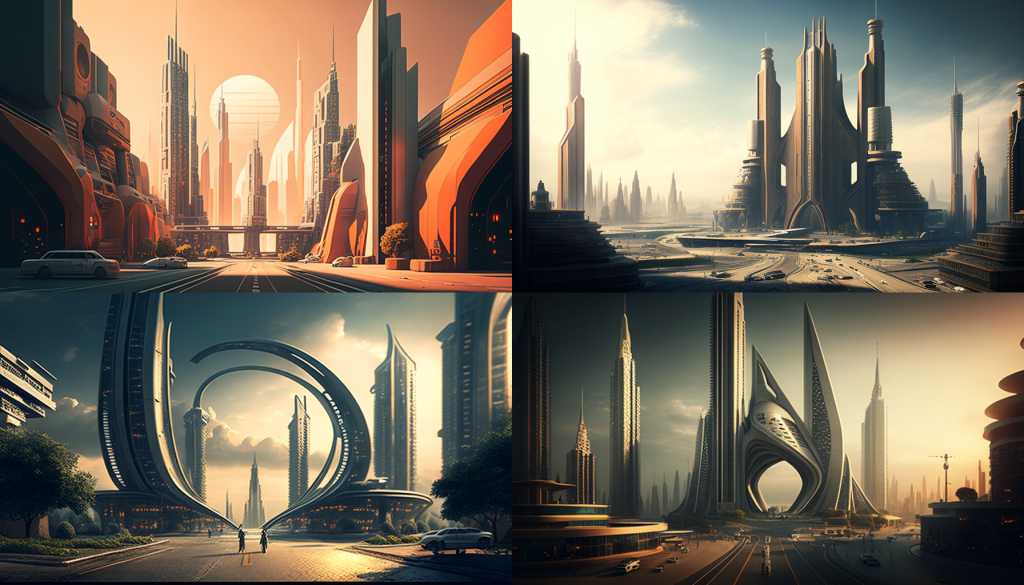

I then figured, what if I just asked Midjourney to imagine the ideal city? I would allow the robot to determine what that meant. But I wanted to start off with some kind of terms to make it look more cinematic, right?

The more futuristic interpretations come from omitting the references to cinema. Midjourney often associates cinema with grandeur, appropriately, but also quite often, it seems, with darkness. (Darkness!). It’s not clear to me exactly what is gyrating inside the algorithmic innerds of the artificial intelligence model with this one, but I’d assume that the model was trained using a lot of Marvel films, or what have you. There’s a ton of stuff that just looks like a straight up Batman movie.

This then leads me to wonder what other biases the model maintains. It seems to competently manage producing imagery for different cultural paradigms, whether you’re thinking about indigenous peoples of North America, Black Americans, or even the likes of one particularly bizarre thread I saw in the Newbie chat about a quite rotund Chilean soldier marching alongside a tank in the mountains.

I get very frustrated with how many people– whether human artists or generative AI models- seem to imagine the ideal sustainable city as just Space Rivendell (from Lord of the Rings). Everything is pristine. There are waterfalls and towers with the masonry equivalent of bargeboard– frilly ornaments highlighting the natural beauty, yada yada yada. I guess Rivendell is iconic, but it doesn’t really tell us much about an “ideal” city.

These are pretty, but they’re super skyscraper-centric and they involve these monumental interpretations of space that just reek of heteropatriarchal science fiction. For my part, I’ve always said that the postapocalypse is going to look less like Reign of Fire (2002) or The Last of Us (2023) and more like the Heidelberg Project.

I wanted to double down on my previous line of questioning to the robot. So, I wrote a brief treatise in which I asked ChatGPT to explain why AI models would create these bifurcations that inevitably resulted in completely unrealistic images of an “idealized” city. I specifically wanted to understand how it is that we imagine idealized imagery of famous– and famously walkable, famously dense- neighborhoods like Brooklyn Heights in New York, or Montmartre in Paris, but still come up with these space-age, Rivendell-ass towers of green trees.

Chat explained that it’s perhaps not just about how the AI model was trained, but about what the average person includes in the very idea of idealism or aspirationalism:

One potential explanation for the preponderance of high-density, space-age images of ideal cities is that these types of images are often used in popular media, marketing materials, and architectural renderings to convey a futuristic or aspirational vision of urban life. This can result in a cultural bias towards these types of images, and a relative lack of representation of more human-scaled, traditional urban environments […] this bias towards futuristic, high-density images may also be a result of the types of images that are included in the datasets used to train AI models. If the majority of images that the AI is exposed to depict high-density, space-age cities, then it is likely that the AI will generate similar images in response to prompts about ideal cities.

ChatGPT, to the author, March 11

The “futuristic or aspirational vision of urban life” is the operative question here. However, upon feeding Midjourney prompts that attached specific terms to what that idealized neighborhood might actually look like in human terms, it came up with a few ideas that were minimally reliant on the motorcar. It didn’t seem to understand my limitation of “buildings that are less than five stories tall,” and this is a frustration I’ve noted from working with Midjourney– it can be really bad at responding to specific details. I’m still learning, though, and I hadn’t yet learned about weights of components of a prompt when I started this article!

Another question I was curious about was why all of the images of “idealized” cities never actually produced anything vaguely resembling the dominant paradigm of development in the United States, which is dually car-centric and low-density (suburban and exurban). Chat tactfully explained to me that, well, that’s kinda the point, isn’t it?

ChatGPT, Confirmed NUMTOT?

One thing was that Chat suggested being more specific if there were things I wanted out of the image. This was, of course, the point of my experiment– to try and see what happened if I wasn’t specific, since I wanted to see what the robot would do with terms like “ideal,” “idealized,” “paradise,” “futuristic,” “clean,” etc. When I did get more specific, Midjourney delivered. Sort of, at least– Midjourney at least understood the meaning of things like “multimodal transportation.” When I turned up the “chaos” parameter, things got absolutely wild. The AI had scoured all of the junky renderings done by the office intern for your state department of transportation’s new multimodal corridor.

So, I give you the Trevor Pawl Memorial Collage below:

This last collage is, well, utterly atrocious. But the images do look so similar to the kinds of things that they put together for these dumb suburban pork projects like Innovate Mound. A helicopter-train-drone that seems to be carrying little ATVs?

Preliminary Conclusions: No One, Including The Robots, Like Car Culture?

Perhaps the most interesting commentary ChatGPT gave me on the subject was almost a musing on the question of why so much imagery of these idealized cities and idealized human environments featured few, if any, automobiles, regardless of what language I put in. Whether we’re looking at a distant future eco-utopia or a present-day neighborhood, one thing that seems to always be missing is, say, a long line of oversized SUVs. Midjourney doesn’t imagine, for example, TxDOT’s nauseating $85 billion highway expansion plan.

You might say, “of course it doesn’t, because you’re entering these commie pinko prompts!”

But I’m not, as I showed above. ChatGPT had an interesting “theory” as to why this was happening:

…the absence of automobiles in futuristic city images may reflect a desire to create more sustainable, human-centered urban environments that prioritize the needs of people over the needs of cars. By reducing the presence of cars in urban environments, it may be possible to create more livable, healthy, and equitable cities that prioritize public space, social interaction, and community engagement. [However, the] absence of cars […] may also reflect a certain utopian idealism that may not be fully achievable in the near term. While it is important to work towards reducing the dominance of cars in urban environments, it may be unrealistic to expect that they will be eliminated entirely in the near future. As such, it is important to balance the desire for more sustainable and livable urban environments with the practical realities of contemporary urban transportation systems.

ChatGPT to the author, March 10, 2023

That ending is a bit wordy and characteristically useless (Chat often ends with “while [x], it’s still important to remember that [y],” even when that’s a redundant thing to say for a seventh time), but it’s way better than the odd interpretation it offered previously, that roads are hard for the AI to imagine because the systems that build roads are complicated.

Realizing that this isn’t a scientific study, I’m still interested to see what else I can extract out of Midjourney, and I’d love to follow up on this article after another few months of messing with it. One thing I’ve definitely established is that the most beautiful renderings, and certainly the most interesting ones, are not creating hellscapes of suburban strip malls and freeway interchanges. It’s not that the AI associates things as being “bad,” they’re just so completely off the radar of aesthetics because even the robots recognize that this is antithetical to the ideal, futuristic, clean, sustainable, livable urban environment. If the robots can figure it out, maybe they can convince our state’s department of transportation?